Last January, a team at the Champalimaud Clinical Centre, in Lisbon, successfully tested the precision of a novel, non-invasive, 100% digital method for locating cancerous breast tumours “on the fly” during surgery. For the first time in an operating room setting, a surgeon wearing an “augmented reality” headset was able to visualise, in real time, a virtual image of the tumour to be removed from inside the patient’s body.

Pedro Gouveia – the surgeon from the Breast Unit of the Champalimaud Clinical Centre who performed the surgery – is pioneering digital technology that could usher in a new era for breast cancer surgery, which he calls Breast 4.0. “Today, the technology and the computing power are available to do it”, he says. His ultimate goal is to develop a system that can be integrated into operating rooms. “We are just at the beginning of this transformation”, he adds.

In recognition of its potential, the Breast 4.0 project today (November 18th) received the “Best and Greatest in Portuguese Technology” award in the Innovation category. These awards, which this year also included categories such as Sustainability, Brands, Computers, and Applications, are an initiative of the Portuguese technology magazine Exame Informática.

But back to the project itself: what is the current situation in conservative breast cancer surgery? For one, because many breast malignancies are now detected at an early stage, they are not palpable. Breast surgeons therefore have to rely entirely on images (mammograms, ultrasound scans, magnetic resonance imaging or MRI) to locate the tumours.

This is not simple, because depending on the type of image, patients may be standing or lying down in different positions, and the breast may be compressed (as in mammography) or simply stretched by gravity. Interpreting the images and inferring, as precisely as possible, the actual location of the tumour from these non-overlapping, deformed views is time-consuming and takes an expert surgeon.

Also, prior to surgery, the location of the tumour inferred from the images needs to be marked directly on the patient, both to show where the tumour actually is and to plan the intervention. Various methods are used for this, all of them invasive, and they can cause pain and anxiety for patients. The method used at Champalimaud consists of a series of needle injections of liquid carbon into the breast, which leave a small circular “tattoo” on the skin and a mark on the tumour, pointing to the target.

But what if real images of the tumour could be fused with a personalised 3D model of the surface of the patient’s torso, despite the deformations the breast undergoes in each type of imaging, and then overlaid on the real environment through special transparent goggles? There would be no more need to painstakingly work out where the tumour is, or to make painful markings, which are also prone to error.

That’s exactly what augmented reality is about. Remember how, in 2016, the mobile game Pokémon GO sent hundreds of millions of players all over the world hunting for virtual monsters in their surroundings? The game was based on... augmented reality (AR) technology, which overlays computer-generated imagery on real-world environments. “In fact, it all started with Pokémon”, says Gouveia.

The idea of venturing into this technological approach initially came from Maria João Cardoso, who leads the surgical team of the Breast Unit and is also Pedro Gouveia’s PhD supervisor. “Using new artificial intelligence technologies for surgery was always something our group believed in, and Dr. Pedro Gouveia was able to exploit this window of opportunity, always with the maximum potential benefits for the patients in mind”, says Maria João Cardoso.

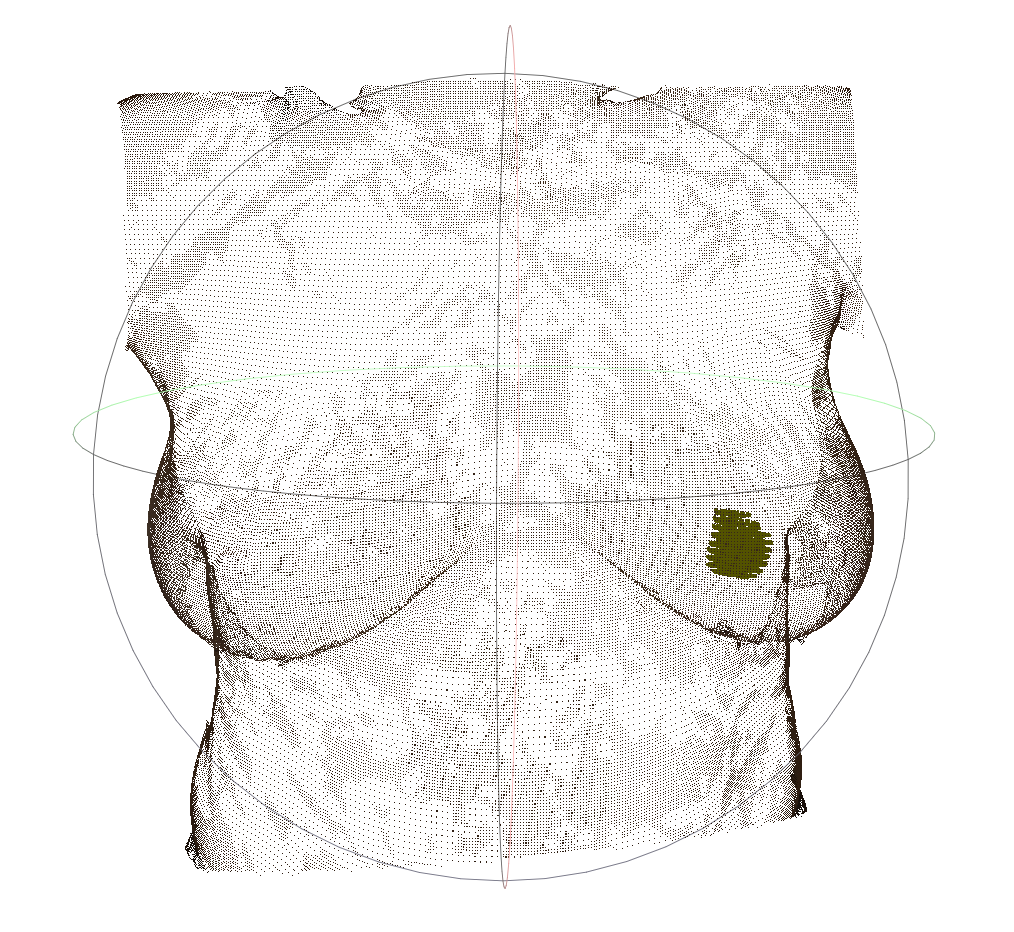

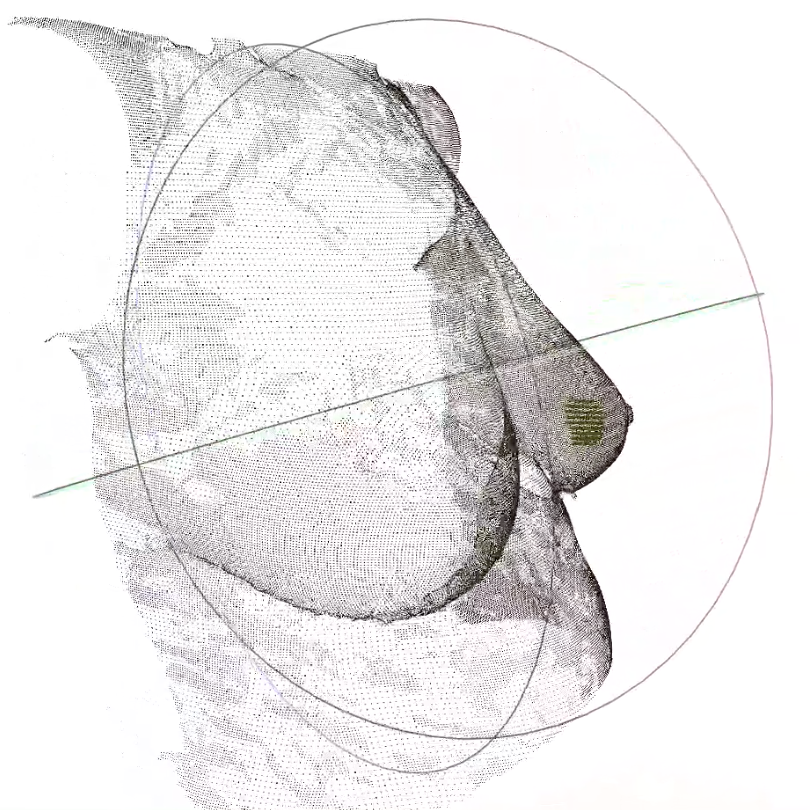

For more than two years, Gouveia has been working with colleagues at the Champalimaud Centre for the Unknown, as well as with computer scientists and engineers from INESC TEC and the Portuguese company AI4medimaging, on how to fuse MRI images of a patient’s tumour with a 3D surface scan of the same patient’s torso, taken with the patient lying supine. To do this, the team uses special “fusion” algorithms developed by Sílvia Bessa (INESC TEC) and Pedro Gouveia. “This has been a team effort through and through on the part of the whole Breast Unit, which has also relied on the support of Celeste Alves, head of the Breast Radiology service, and Nickolas Papanikolaou, group leader of the Computational Clinical Imaging Group”, Gouveia points out.

To produce the final fusion image, the scientists start by drawing a set of reference dots on the patient’s torso with a permanent marker, outlining the breast. This poses a problem, however: although these dots appear on the 3D surface scan of the torso, they are not visible on the MRI images. The team has come up with a clever, simple and cheap solution: once the 3D scan is completed, they fix cod liver oil pills over the black marker dots. Because the oil shows up on MRI, each pill reveals the position of its dot in the MRI images!
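Why do markers that show up in both the surface scan and the MRI matter? Pairs of corresponding landmarks let an algorithm compute the transformation that carries one dataset into the other’s coordinate frame. The Python sketch below illustrates this with a classic rigid, point-based method (the Kabsch algorithm), assuming the breast does not deform between the two acquisitions. It is not the team’s fusion algorithm, which has to cope with the deformation of soft breast tissue; the function names and all marker and tumour coordinates are hypothetical, for illustration only.

```python
import numpy as np


def rigid_landmark_registration(source, target):
    """Hypothetical helper: Kabsch algorithm.

    Finds the rotation R and translation t that minimise
    sum ||R @ s_i + t - t_i||^2 over paired landmarks (rows of source/target).
    Assumes rigid motion only (no tissue deformation).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    if source.shape != target.shape or source.ndim != 2 or source.shape[1] != 3 or len(source) < 3:
        raise ValueError("need two matching (N, 3) arrays with N >= 3")
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # 3x3 cross-covariance of the centred landmark sets
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Correct for a possible reflection so that R is a proper rotation (det = +1)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t


def rms_error(source, target, R, t):
    """Root-mean-square distance between mapped source landmarks and target landmarks."""
    mapped = np.asarray(source, dtype=float) @ R.T + t
    return float(np.sqrt(np.mean(np.sum((mapped - np.asarray(target)) ** 2, axis=1))))


# Hypothetical marker-dot positions on the 3D surface scan, in millimetres (not patient data).
dots_scan = np.array([[0.0, 0.0, 0.0], [40.0, 0.0, 0.0], [0.0, 60.0, 0.0], [20.0, 30.0, 25.0]])

# Simulate where the oil pills would appear in MRI coordinates: a known rotation plus shift.
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -12.0, 80.0])
pills_mri = dots_scan @ R_true.T + t_true

# Estimate the MRI -> surface-scan transform from the paired landmarks.
R, t = rigid_landmark_registration(pills_mri, dots_scan)

# Map a hypothetical tumour centre seen on MRI into the surface-scan frame.
tumour_mri = np.array([10.0, 20.0, 10.0]) @ R_true.T + t_true
tumour_scan = R @ tumour_mri + t
print(tumour_scan)  # should be close to [10, 20, 10]
print(rms_error(pills_mri, dots_scan, R, t))  # close to zero for this noise-free example
```

A rigid transform cannot bend or stretch, so a real breast-fusion pipeline would likely need a deformable (non-rigid) model on top of this kind of landmark alignment; the residual returned by `rms_error` is one simple way to see how well the landmarks agree after alignment.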

The final image, once uploaded to the augmented reality headset, is superimposed on the real environment the surgeon sees. So when the surgeon looks at the torso of the patient, lying supine on the operating table, the surgeon sees the tumour where it actually is. The Portuguese company NextReality provided augmented reality support to the team and lent it its HoloLens headset.

A similar approach has already been used in neurosurgery, Gouveia points out, but making it work on soft, deformable tissue such as the breast is another matter. Also, the team is set on automating the process so that the 3D body scan of the patient lying on the operating table can be acquired “on the fly”, something that has never been attempted. “In the January surgery, we did everything manually”, says Gouveia. “The digital 3D breast model of the patient took more than seven hours to be produced after registration with a handheld scanner and breast MRI!” Gouveia’s idea for the future is to install several 3D scanners on the operating room ceiling so that the system can work quickly and autonomously.

Before testing their approach during an actual surgery, the team had already assessed, between 2017 and 2019, the precision of their experimental method in 16 patients scheduled for surgery, simply by comparing the virtual images of their tumours with the corresponding invasive skin markings, which had already been made in preparation for surgery. The team described this “proof of concept” study, which concluded that the approach was feasible, in a paper published in January 2020 in a special issue on Artificial Intelligence in Breast Cancer Care of the journal The Breast.

As for the real-life test, “the test during surgery was a success”, says Gouveia. “The position of the virtual image [seen through the headset] matched that of the carbon tattoo on the patient’s skin”. This suggests that the surgeon could have performed the surgery based solely on the digital data, with the added advantage of having more information about the shape and boundaries of the tumour.

“But of course, this is still just a prototype”, says Gouveia. There is still a long way to go before the team can turn it into a reliable, fully automated commercial medical device that could be used in hospitals around the world, and perhaps, who knows, for other types of cancer too.

By Ana Gerschenfeld, Science Writer of the Champalimaud Foundation.